AIShell, the latest addition to the PowerShell ecosystem, may become your best friend at the command line. It currently has native support for the public OpenAI service, OpenAI hosted in Azure, and Azure Copilot, which makes it a great starter package for the Microsoft world.

Still, there are people and organizations that cannot or do not want to share their code with publicly hosted LLMs, or even with private LLMs that are not hosted on their own premises. For them, AIShell offers the option to run AI requests against a self-hosted LLM running on Ollama. The result of our efforts will be AIShell answering from a model that runs entirely on your own machine.

Ollama is open source software that runs LLMs behind a local web server and wraps an API around the model, so you can "talk" to it via REST. It has clients for popular operating systems like Windows, macOS and Linux, so it's easy to get your own LLM running on your machine within a few minutes.

In my environment I used my Windows laptop with 32 GB of RAM to run both the LLM and AIShell for testing and experimenting.

Steps to make it work

- Install AIShell-preview-1 via install-script according to this link

- Install Ollama and run an LLM

- Clone the AIShell GitHub repo to a separate folder

- Compile the Ollama Agent (just a script, no dev-know-how needed)

- Copy Ollama-agent files to your AIShell instance

Install AIShell

This is straightforward on Windows. Run the install script from the link. AIShell will be installed in $env:LOCALAPPDATA/Programs/AIShell, and you can run aish.exe or the module cmdlets such as Invoke-AIShell, and so forth.

Install Ollama

At https://ollama.com there is a download button. If you press it, you will see this:

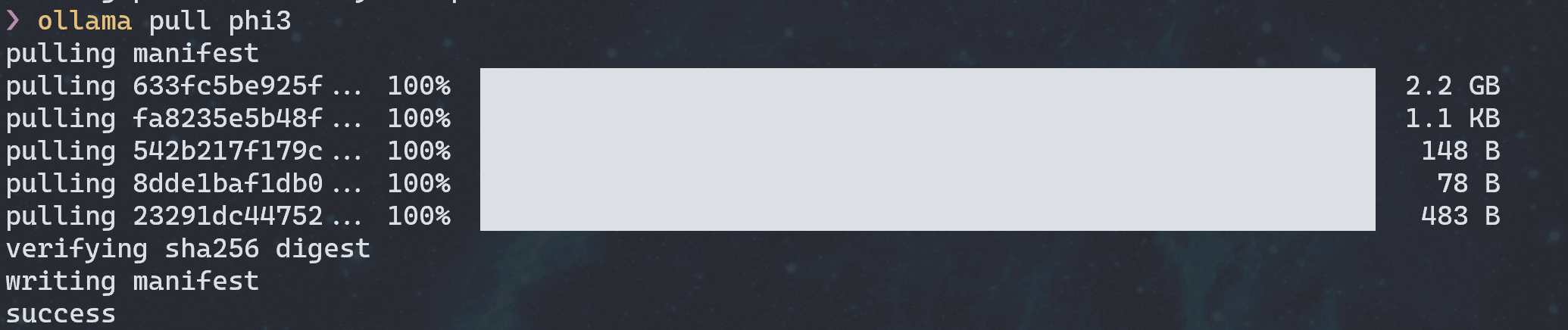

Choose "Windows" and find the installer OllamaSetup.exe in your download directory. Install it and open a command line. To download the "phi3" model, run:

<pre class="lang:ps decode:true ">ollama pull phi3</pre>

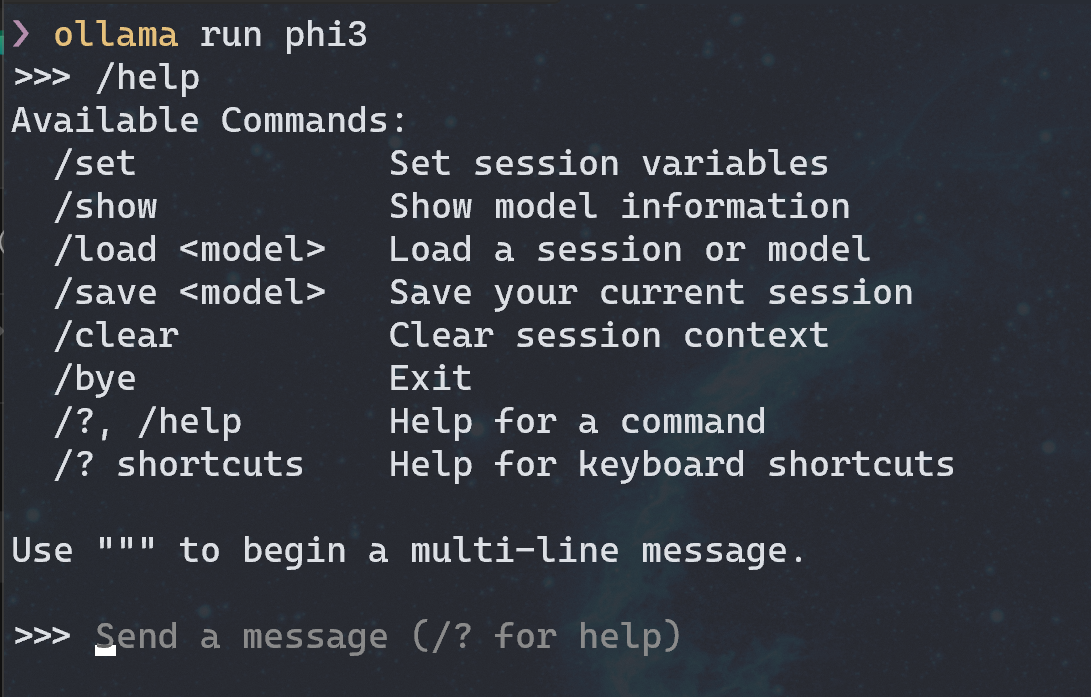

Now you have the model installed on your machine. The last step is to run it with the command:

<pre class="lang:ps decode:true ">ollama run phi3</pre>
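Since Ollama wraps the model in a REST API, you can check that it answers before wiring it into AIShell. The following is only a minimal sketch. It assumes that Ollama listens on its default address http://localhost:11434 and that the phi3 model has been pulled as described above. The function names New-OllamaRequestBody and Invoke-OllamaPrompt are made up for this example and are not part of Ollama or AIShell.

```powershell
# Build the JSON payload for Ollama's /api/generate endpoint.
function New-OllamaRequestBody {
    param(
        [Parameter(Mandatory)] [string] $Prompt,
        [string] $Model = 'phi3'
    )
    # stream = $false asks for one complete JSON answer instead of streamed chunks
    @{ model = $Model; prompt = $Prompt; stream = $false } | ConvertTo-Json
}

# Send a prompt to the local Ollama server and return the generated text.
function Invoke-OllamaPrompt {
    param(
        [Parameter(Mandatory)] [string] $Prompt,
        [string] $Model = 'phi3',
        # Assumption: Ollama runs on this machine with its default port
        [string] $BaseUri = 'http://localhost:11434'
    )
    $body = New-OllamaRequestBody -Prompt $Prompt -Model $Model
    $result = Invoke-RestMethod -Uri "$BaseUri/api/generate" -Method Post `
        -Body $body -ContentType 'application/json'
    # The generated text is returned in the 'response' property
    $result.response
}
```

With Ollama running, a call such as `Invoke-OllamaPrompt -Prompt 'Explain Get-ChildItem in one sentence.'` should return the model's answer as plain text.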

Clone the AIShell Repo to a separate folder

The default AIShell installation includes just two agents:

- OpenAI Agent

- Azure Copilot agent.

These agents are installed in $env:localappdata/programs/AIShell/agents.

We now want to create a new agent for Ollama and integrate it into our AIShell. To do this, clone the AIShell GitHub repo into a temporary place on your disk. In my example I use $home/tempbuild.
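The clone step could look roughly like this. It is a sketch that assumes git is installed and on your PATH, and that the repo lives at https://github.com/PowerShell/AIShell. The variable names $buildRoot and $repoPath are only for illustration.

```powershell
# Folder used in this article for the temporary build
$buildRoot = Join-Path $HOME 'tempbuild'
$repoPath  = Join-Path $buildRoot 'AIShell'

# Create the folder if it is missing, then clone only when no copy exists yet
New-Item -ItemType Directory -Path $buildRoot -Force | Out-Null
if (-not (Test-Path $repoPath)) {
    # Assumption: git is available on the PATH
    git clone https://github.com/PowerShell/AIShell.git $repoPath
}
```

The Test-Path check makes the snippet safe to run a second time without git complaining about an existing folder.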

Compile the Ollama Agent

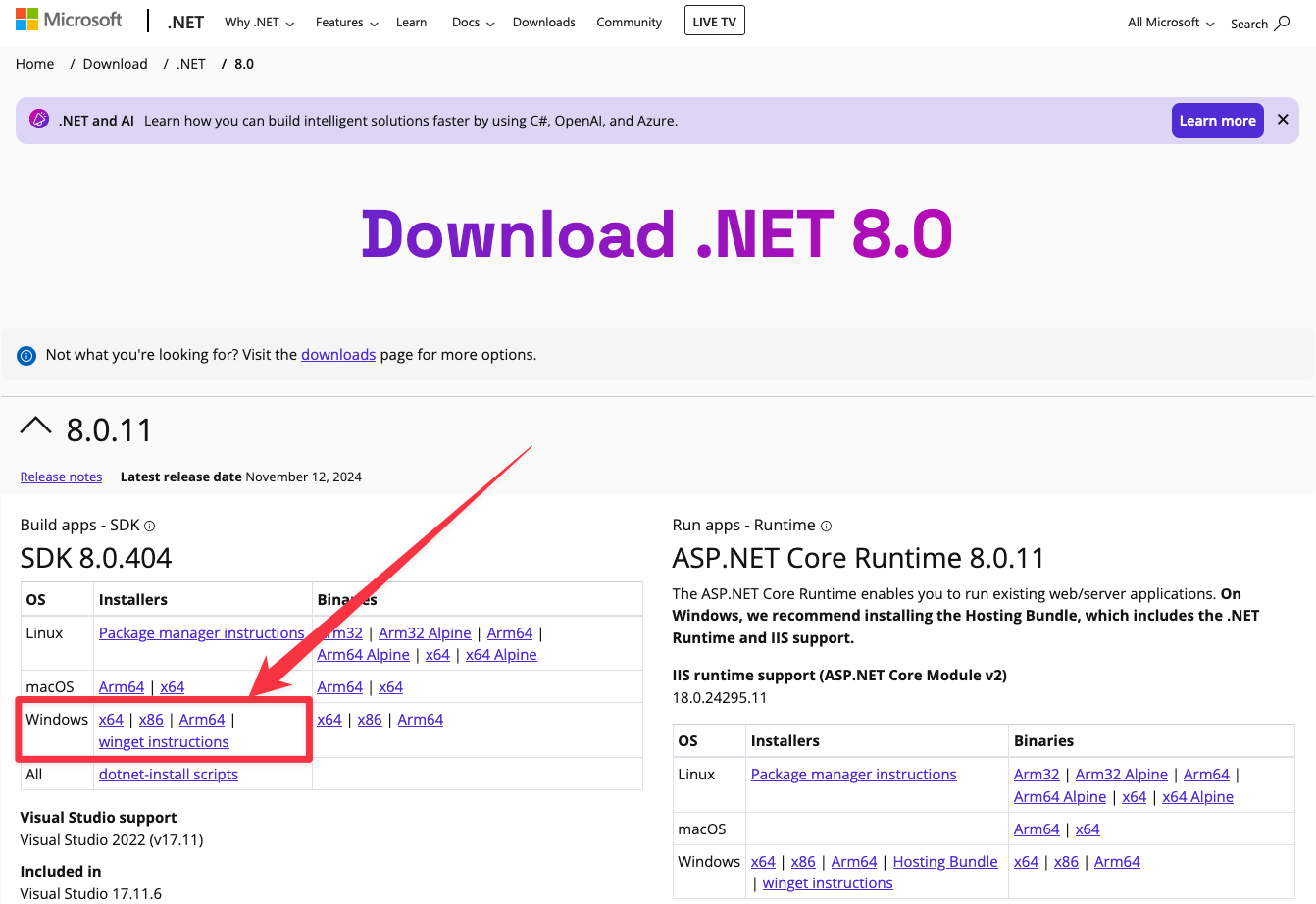

Before we build the Agent we need to make sure we have the .NET SDK 8 installed on our machine. Go to https://dotnet.microsoft.com/en-us/download/dotnet/8.0 and download the proper installer for the .NET SDK.

You could also use winget to bring the SDK to your machine:

<pre class="lang:ps decode:true ">winget install Microsoft.DotNet.SDK.8</pre>

(In my example I already had it installed.)

Now we open VS Code, point it to $home/tempbuild/AIShell, import the build module from the root of the cloned repo and run the build cmdlet. In my example I compiled everything; if you only want to compile the Ollama agent, run:

<pre class="lang:ps decode:true ">Import-Module ./build.psm1
Start-Build -AgentToInclude ollama</pre>

Copy Ollama Agent Files to your AIShell directory

The build process creates libraries which are then used by aish.exe. To make them usable, we need to copy them to the directory in which aish.exe searches for agents.
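A copy step along these lines should do the job. Treat it as a sketch under assumptions: the helper name Copy-AIShellAgent is invented for this article, and the agent folder name AIShell.Ollama.Agent is my assumption about what aish.exe expects, so compare it with the folders of the agents that are already installed.

```powershell
# Copy a compiled agent into its own subfolder of the AIShell 'agents' directory.
function Copy-AIShellAgent {
    param(
        # Folder that holds the compiled agent files (taken from your build output)
        [Parameter(Mandatory)] [string] $BuildOutput,
        # The 'agents' folder of the AIShell installation
        [Parameter(Mandatory)] [string] $AgentsRoot,
        # Assumption: the subfolder name the agent should live in
        [string] $AgentName = 'AIShell.Ollama.Agent'
    )
    if (-not (Test-Path $BuildOutput)) {
        throw "Build output not found: $BuildOutput"
    }
    $target = Join-Path $AgentsRoot $AgentName
    # Create the target folder (and any missing parents) if needed
    New-Item -ItemType Directory -Path $target -Force | Out-Null
    # Copy all files and subfolders, overwriting older copies
    Copy-Item -Path (Join-Path $BuildOutput '*') -Destination $target -Recurse -Force
    # Return the folder the files were copied to
    $target
}
```

You would call it with `Copy-AIShellAgent -BuildOutput $ollamaBuildFolder -AgentsRoot "$env:LOCALAPPDATA/Programs/AIShell/agents"`, where $ollamaBuildFolder is a placeholder for the folder in which the build placed the Ollama agent files.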

After this process you are ready to go and use aish with your locally running Ollama LLM.

I hope you can follow the write-up, and I wish you happy experimenting!

Roman from the PowerShell UserGroup Austria

Picture from Simon Wiedensohler on Unsplash